Have you ever stopped to think about how self-driving cars actually see the world around them? It's a fascinating question. For a while now, people have been talking a lot about BEV, which stands for Bird's Eye View, and about Transformer networks. These two ideas are getting a great deal of attention in autonomous driving, and it's worth asking what they do and why they matter so much right now.

A car that drives itself needs to gather a lot of information about its surroundings. Think about how you look around when you're walking or driving: you take in what's ahead, what's beside you, and what's behind. Cars try to do something similar, and that's where the bird's eye view comes in. It's like giving the car an extra set of eyes that looks down from high above, offering a different perspective on the road and everything on it.

This way of seeing things, combined with clever ways of processing all that visual data, is shaping how these cars learn to move around safely. It's not just about seeing; it's about making sense of what's seen. That is the "vance" part of this discussion: how the technology is advancing and helping these vehicles become more capable. We're going to look at what BEV is all about and why it's such a big topic.

Table of Contents

- What Exactly Is BEV Vance, And What Does It Do?

- How Does BEV Vance Gather Information About Its Surroundings?

- Connecting BEV Vance with Electric Vehicles

- Is BEV Vance the Key to Truly Autonomous Driving?

- Different Ways BEV Vance Sees the World

- The Special Layer in BEV Vance Systems

- What Comes After BEV Vance?

- The Future of BEV Vance and Big Models

What Exactly Is BEV Vance, And What Does It Do?

BEV, or Bird's Eye View, is pretty much what it sounds like in the context of self-driving cars: a way for the car's computer to represent the road from an overhead perspective, like a bird flying high above. Some people wonder whether this top-down look actually brings anything new to how the car plans its moves or makes decisions. Going by the name alone, you might think it's just a different way of showing what cameras and other sensors already pick up.

The core idea is this. Seen from the car's own eye level, the world is tricky: things block the view, and the same object looks different from different angles and distances. A bird's eye view tries to make everything look consistent. It lines up all the pieces of information the car collects in one shared overhead layout, which makes it easier to figure out where things are in relation to each other on the road. For example, a camera might catch only part of a person standing behind a bush, and judging their distance from the image alone is hard. Once that person is placed in the bird's eye view, their position relative to the road is explicit. It's a way of making sense of the surrounding space in a unified manner.

A bird's eye view might not give a car brand new abilities, but it does offer a way to process information that makes other tasks simpler. It's about how the car's software interprets the world from existing data, not about adding a new sensor. The approach is particularly helpful for understanding the layout of the road, where other cars are, and where pedestrians might be walking, which gives a more complete picture of the immediate driving area. This is where the "vance" aspect, the forward movement of this technology, begins to show its promise.
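To make the "shared overhead layout" idea concrete, here is a minimal sketch of how a position around the car could be mapped to a cell in a BEV grid. The function name, the 50 m range, the 0.5 m cell size, and the axis convention (x forward, y to the left, car at the grid centre) are all assumptions chosen for illustration, not something a particular system prescribes.

```python
import math

def ego_to_bev_cell(x_forward_m, y_left_m, grid_range_m=50.0, cell_size_m=0.5):
    """Map a point in the car's frame (metres) to a (row, col) BEV grid cell.

    Assumed convention: the car sits at the grid centre, the row index grows
    with distance ahead, and the column index grows towards the left.
    Returns None when the point falls outside the grid.
    """
    size = int(round(2 * grid_range_m / cell_size_m))  # cells per side
    row = math.floor((x_forward_m + grid_range_m) / cell_size_m)
    col = math.floor((y_left_m + grid_range_m) / cell_size_m)
    if 0 <= row < size and 0 <= col < size:
        return row, col
    return None

# A pedestrian 10 m ahead and 5 m to the right of the car.
print(ego_to_bev_cell(10.0, -5.0))
```

Whatever sensor produced the detection, it ends up as an index into the same grid, which is the consistency the overhead view is meant to provide.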

How Does BEV Vance Gather Information About Its Surroundings?

So how do these BEV systems actually collect the details they need to build that overhead picture? It's a clever process. Each BEV query, which you can think of as a request for information about one spot in the overhead view, pulls details together in two ways. First, it uses spatial cross-attention to gather spatial features from the area around the car. This tells it where things are in space, such as how far away a car is or where a lane line sits.

Second, it collects information over time using temporal self-attention. This helps the system remember what happened a moment ago and connect it with what's happening now. It's a bit like remembering that the car in front of you was moving a second ago and has now stopped. The gathering of spatial and temporal details is repeated over several rounds. The idea is that the two kinds of information reinforce each other, so the overall picture becomes clearer and more complete with each pass. This continuous refinement is a big part of how BEV systems, with their "vance" or forward thinking, improve their perception.

This repeated gathering and refining is vital for a car that drives itself. Things on the road are always moving and changing, so the system has to keep up with those shifts in real time. By combining what it sees right now with what it saw a moment ago, the car can make better guesses about where things are going and what might happen next. It's a dynamic way of building a picture of the world rather than taking a single snapshot, and this ability to integrate information from different points in time is a key part of how BEV systems are advancing.
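The alternation described above can be sketched in a few lines. This is a deliberately stripped-down illustration, assuming plain scaled dot-product attention with no learned projections, no normalisation, and no feed-forward layers, all of which real networks add. The function names and the three-round default are hypothetical.

```python
import math

def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    weights = [w / total for w in weights]
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]

def refine_bev_query(query, prev_bev_feature, image_features, num_rounds=3):
    """Alternate temporal and spatial attention for a single BEV query."""
    for _ in range(num_rounds):
        # Temporal step: look at last frame's BEV feature and the current query.
        history = [prev_bev_feature, query]
        temporal = attend(query, history, history)
        query = [q + t for q, t in zip(query, temporal)]  # residual update
        # Spatial step: look at features from the current sensor views.
        spatial = attend(query, image_features, image_features)
        query = [q + s for q, s in zip(query, spatial)]  # residual update
    return query

refined = refine_bev_query([1.0, 0.0], [0.5, 0.5], [[1.0, 0.0], [0.0, 1.0]])
print(refined)
```

Each round lets the memory of the previous frame influence what the query looks for in the current views, and the other way round, which is the mutual reinforcement the text refers to.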

Connecting BEV Vance with Electric Vehicles

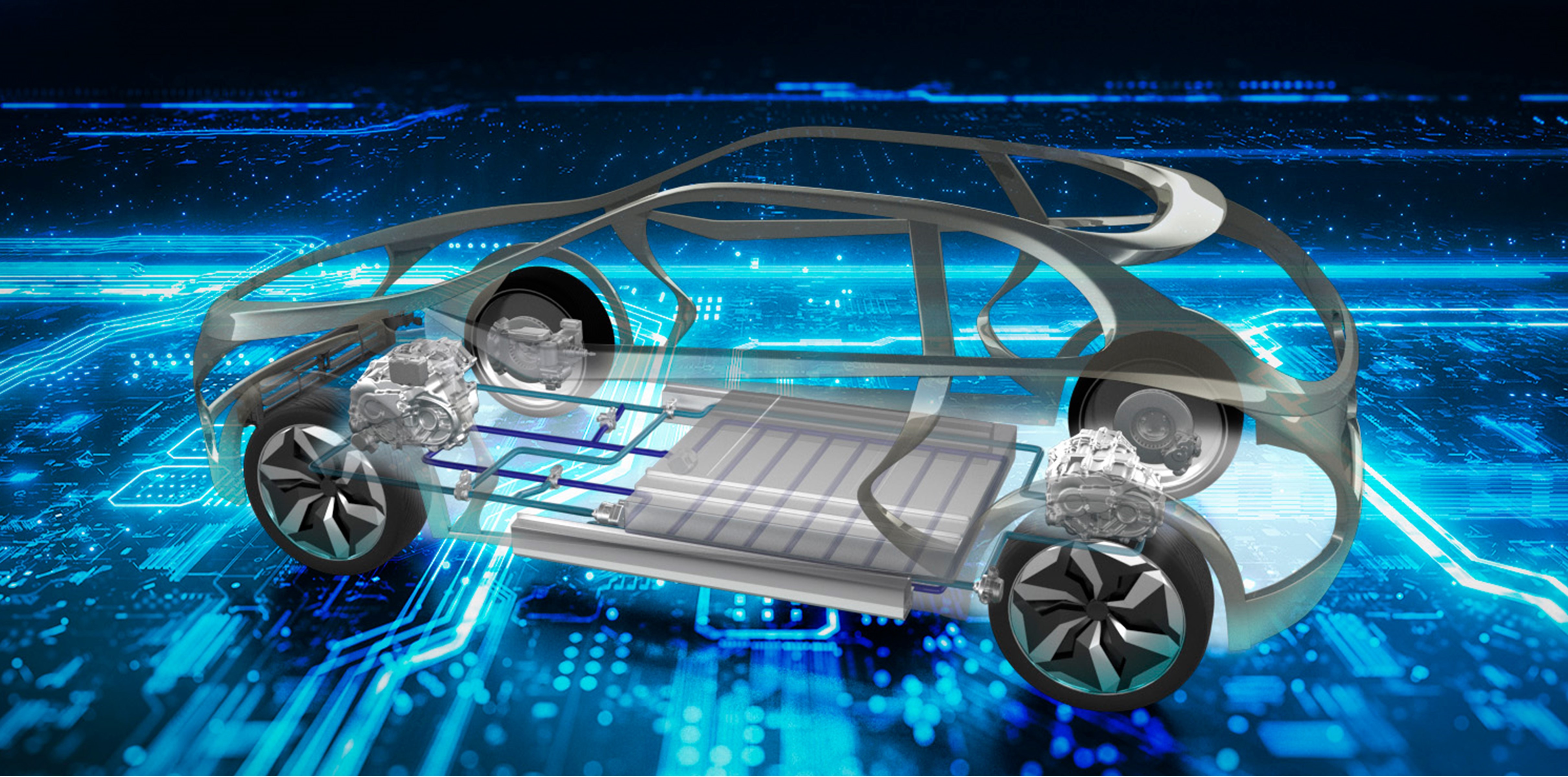

The term BEV also pops up in a completely different conversation, one about electric vehicles. EV is the umbrella term for any car driven by an electric motor. BEV, in that context, means Battery Electric Vehicle: an EV that runs purely on batteries and is recharged by plugging into a charger. A PHEV, or Plug-in Hybrid Electric Vehicle, is a bit different, since it pairs a plug-in battery with a combustion engine.

Picking an electric vehicle is not a one-size-fits-all decision; it depends on your own circumstances. BEVs and PHEVs are both common choices. If you have a place to charge at home and you don't typically take very long trips, a BEV might be a really good fit, since it's a straightforward option for daily commutes and shorter journeys. This is a different kind of "vance," a forward movement in how we power our personal transportation, distinct from the self-driving technology but sharing the same acronym.

So the BEV in "BEV Vance" (the self-driving technology) is about how cars see, while the BEV in the electric vehicle discussion is about how they're powered. It's a bit confusing, but the context usually makes it clear which one people mean. Acronyms with different meanings in different fields are common in technical conversations.

Is BEV Vance the Key to Truly Autonomous Driving?

Many people are starting to think that BEV, this bird's eye view approach, could be the big opportunity for what's called an "end-to-end" self-driving system. That means a system where the car takes in all its sensor data and directly outputs how it should drive, without a long chain of separate hand-built steps in between. It's an ambitious idea, but BEV seems to offer a path to get there.

For example, in a system called "SelfD," the authors used the BEV perspective to make all sorts of driving video data look the same, regardless of how it was originally captured. This helped them train models for planning the car's movements and making decisions. Another system, called MP3, brought together map information and sensor data within that same BEV space, which allowed the car to learn how to understand its surroundings and figure out what to do next. In both cases the point is a unified way for the car to understand its environment, which is a big step forward for autonomous driving, truly a "vance" in the field.

The strength of the BEV space is that it simplifies much of the complex information a self-driving car has to deal with. Putting everything into one consistent overhead view makes it easier for the car's computer to process and react. This ability to organize and standardize sensor input is an important piece of the puzzle for making self-driving cars more reliable and capable, and a foundation for the next generation of autonomous systems.

Different Ways BEV Vance Sees the World

BEV perception algorithms are often grouped by the sensors they use to create that overhead picture. The first group is BEV LiDAR, which uses laser points to build a detailed 3D map of the surroundings. LiDAR measures depth directly, so it's an accurate way to locate objects even in challenging light conditions. It's like having a very precise measuring tape for everything around the car.
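Because LiDAR points already carry 3D coordinates, turning them into an overhead grid is mostly a matter of binning. The sketch below assumes points given as (x, y, z) tuples in metres in the car's frame, and it keeps two simple per-cell statistics, a point count and a maximum height. The function name, grid size, and choice of statistics are illustrative assumptions; real pipelines usually feed richer per-cell features into a neural network.

```python
def lidar_to_bev(points, grid_range_m=10.0, cell_size_m=1.0):
    """Bin LiDAR points into an overhead grid of counts and maximum heights."""
    size = int(round(2 * grid_range_m / cell_size_m))
    counts = [[0] * size for _ in range(size)]
    max_z = [[None] * size for _ in range(size)]
    for x, y, z in points:
        # Skip points that fall outside the area covered by the grid.
        if not (-grid_range_m <= x < grid_range_m and -grid_range_m <= y < grid_range_m):
            continue
        row = min(int((x + grid_range_m) // cell_size_m), size - 1)
        col = min(int((y + grid_range_m) // cell_size_m), size - 1)
        counts[row][col] += 1
        if max_z[row][col] is None or z > max_z[row][col]:
            max_z[row][col] = z
    return counts, max_z

counts, max_z = lidar_to_bev([(0.5, 0.5, 0.2), (0.7, 0.1, 1.5), (100.0, 0.0, 0.0)])
print(counts[10][10], max_z[10][10])
```

The third point lies far outside the 10 m range and is simply dropped, while the first two land in the same cell.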

Then there's BEV Camera, which relies on images from regular cameras and uses what the cameras see to create the bird's eye view. It's more challenging because cameras don't measure depth directly the way LiDAR does, but it's also less expensive to implement. Finally, there's BEV Fusion, which combines data from different types of sensors, such as cameras and LiDAR, to get the best of both worlds. Fusion tries to build the most complete and reliable picture possible by drawing on multiple sources of information, and it represents a significant "vance" in sensor integration.
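To see why the camera case is harder, it helps to look at the classic geometric workaround, often called inverse perspective mapping. It recovers a ground position from a single pixel only by assuming the road is flat and the camera is level and facing forward. The sketch below makes exactly those assumptions, and the function and parameter names are hypothetical; learned camera-to-BEV methods exist precisely because these assumptions often fail.

```python
def pixel_to_ground(u, v, focal_px, cx, cy, cam_height_m):
    """Back-project an image pixel onto a flat road.

    Assumes an ideal pinhole camera that is level, faces forward, and sits
    cam_height_m above a perfectly flat ground plane. Returns the point as
    (forward_m, left_m), or None for pixels at or above the horizon.
    """
    dv = v - cy  # pixel rows below the horizon line
    if dv <= 0:
        return None  # the viewing ray never reaches the ground
    forward = focal_px * cam_height_m / dv
    left = -(u - cx) * forward / focal_px
    return forward, left

# A pixel 150 rows below the image centre and 100 columns right of it.
print(pixel_to_ground(740, 510, focal_px=1000.0, cx=640.0, cy=360.0, cam_height_m=1.5))
```

Anything that is not on the road surface, such as the roof of a truck, ends up in the wrong place under this model, which is one reason camera-only BEV is considered the harder problem.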

Each of these methods has its own strengths and weaknesses, but the goal is always the same: a clear, unified bird's eye view of the environment. Which method to use depends on the needs of the particular self-driving system, along with factors like cost and performance. What's clear is that translating various sensor inputs into a consistent BEV representation is a key part of how these systems are evolving.
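Once two sensors have each produced a grid in the same overhead layout, combining them becomes a cell-by-cell operation. The sketch below uses a fixed weighted average with made-up weights, and treats None as "this sensor has nothing for this cell." It is only meant to show why a shared grid makes fusion easy; real fusion networks typically learn how to merge feature maps rather than averaging scores.

```python
def fuse_bev_grids(camera_bev, lidar_bev, camera_weight=0.4, lidar_weight=0.6):
    """Fuse two same-sized BEV score grids cell by cell."""
    fused = []
    for cam_row, lidar_row in zip(camera_bev, lidar_bev):
        fused_row = []
        for cam, lid in zip(cam_row, lidar_row):
            if cam is None and lid is None:
                fused_row.append(None)  # neither sensor covers this cell
            elif cam is None:
                fused_row.append(lid)   # LiDAR only
            elif lid is None:
                fused_row.append(cam)   # camera only
            else:
                total = camera_weight + lidar_weight
                fused_row.append((camera_weight * cam + lidar_weight * lid) / total)
        fused.append(fused_row)
    return fused

camera = [[1.0, None], [None, 0.0]]
lidar = [[0.0, 0.5], [None, 1.0]]
print(fuse_bev_grids(camera, lidar))
```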

The Special Layer in BEV Vance Systems

Here's something interesting about BEV networks. They have a special feature layer in the middle, called the BEV layer. The neat thing about this layer is that you can look at it directly and understand what it means. It's not just a bunch of abstract numbers; it holds meaningful information about the world around the car, laid out in that bird's eye view format. This is a big deal because it makes it easier for people to understand what the system is "thinking."

When the system needs to produce a final output, like the objects around the car or where to steer, it uses what's called a "task head" that's connected right after this BEV layer. Because the BEV layer holds such clear and interpretable information, the heads built on top of it tend to produce better results. It's like having a well-organized set of notes before you write an essay; the essay is likely to be clearer and more accurate. This direct interpretability is a significant step forward, a true "vance" in making these complex systems more transparent and effective.
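As a rough picture of what a task head does, the sketch below applies one small linear classifier to the feature vector in every BEV cell, which is what a 1x1 convolution amounts to. The two-channel features, the weights, and the class meanings are invented for the example; a trained head would learn its weights and usually has more layers.

```python
def classify_bev_cells(bev_features, class_weights, class_biases):
    """Label every BEV cell with the index of its highest-scoring class.

    bev_features is a grid (rows x cols) of feature vectors. The same
    weights are applied at every cell, like a 1x1 convolution.
    """
    labels = []
    for row in bev_features:
        label_row = []
        for feature in row:
            logits = [
                sum(w * f for w, f in zip(weights, feature)) + bias
                for weights, bias in zip(class_weights, class_biases)
            ]
            best = max(range(len(logits)), key=logits.__getitem__)
            label_row.append(best)
        labels.append(label_row)
    return labels

# Hypothetical classes: 0 = drivable road, 1 = vehicle.
features = [[[1.0, 0.0], [0.0, 1.0]]]
weights = [[1.0, 0.0], [0.0, 1.0]]
biases = [0.0, 0.0]
print(classify_bev_cells(features, weights, biases))
```

Because the head works on an overhead grid, its output is itself a small map that a person can inspect cell by cell.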

This approach often uses attention mechanisms and Transformer networks, too. You might have heard of these. They're ways for the system to work out which pieces of information are most important and how different parts of the data relate to each other. So it's not just about having a BEV layer, but also about using these techniques to make the most of the information within it. The aim is for the system to pay attention to the right things at the right time, which improves the car's ability to perceive its surroundings.

What Comes After BEV Vance?

If BEV perception in the bird's eye view coordinate system is the stepping stone for self-driving solutions that rely mostly on vision, then the Occupancy Network is seen as the next big achievement for pure vision-based self-driving. The Occupancy Network first drew wide attention in 2022, when Tesla presented it, and it builds on the ideas that BEV brought to the table. It takes visual understanding a step further by describing which parts of 3D space are occupied, rather than only flattening the scene into an overhead map.

Both BEV and Occupancy Network algorithms need to pull useful details out of data, whether that data is 2D (like a regular image) or 3D (like a point cloud). They need to separate different kinds of information and combine details from various types of data. They have to handle information that changes over time. And they have to build effective models and make good predictions about the world. It's a complex dance of data processing, but it's what makes these systems so powerful, showing a clear "vance" in capability.
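The difference between the two representations is easiest to see side by side. In the sketch below, a handful of 3D points is turned into a set of occupied voxels and then flattened into BEV cells by discarding height. Real occupancy networks predict the voxels from camera features rather than reading them off measured points, so this only illustrates the data structure; the function names and the 0.5 m voxel size are assumptions.

```python
def voxelize(points, voxel_size_m=0.5):
    """Return the set of (ix, iy, iz) voxels that contain at least one point."""
    return {
        (int(x // voxel_size_m), int(y // voxel_size_m), int(z // voxel_size_m))
        for x, y, z in points
    }

def collapse_to_bev(occupied_voxels):
    """Flatten a 3D occupancy set into 2D BEV cells by dropping height."""
    return {(ix, iy) for ix, iy, _ in occupied_voxels}

# Two points stacked vertically (say, a bumper and a roof) plus one elsewhere.
points = [(1.2, 0.3, 0.1), (1.2, 0.3, 1.9), (3.0, 0.0, 0.2)]
voxels = voxelize(points)
bev_cells = collapse_to_bev(voxels)
print(len(voxels), len(bev_cells))
```

The occupancy grid keeps the two stacked points apart, while the BEV view merges them into one cell. That retained height is the extra information an occupancy representation offers.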

Whether a self-driving system uses pre-made maps or tries to drive without them, its perception stage always needs to figure out where objects are and what they are. This object detection isn't necessarily tied to whether the system uses BEV or Transformer networks. So the idea that "map-less systems all use xxx" isn't quite right. Map-less systems can, and often do, use these technologies, but they're not the only options. BEV itself isn't a brand new hot topic either; its most common use is in 3D detection, and how it is built depends on the kind of input data it gets.

The Future of BEV Vance and Big Models

Tesla, for instance, saw limitations in traditional 2D vision systems for self-driving cars, especially when it came to accurately mapping 2D images into a 3D understanding of the world. So at its AI Day in 2021 it presented a BEV+Transformer network. This system works directly in the BEV space, building a complete picture of the whole scene, which lets the car understand things like the size and direction of objects much more clearly. It was a big step that moved the technology forward in a very practical way.
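Size and direction are easy to express in the overhead view because an object can be described by a centre, a length, a width, and a heading angle. As a small illustration, the sketch below computes the four corners of such a box. The parameter names and the convention that yaw is measured counter-clockwise from the forward axis are assumptions for the example.

```python
import math

def bev_box_corners(center_x, center_y, length_m, width_m, yaw_rad):
    """Return the four corners of an oriented box in the BEV plane.

    Yaw is assumed to be measured counter-clockwise from the x axis.
    Corners are ordered front-left, front-right, rear-right, rear-left.
    """
    cos_y, sin_y = math.cos(yaw_rad), math.sin(yaw_rad)
    half_l, half_w = length_m / 2.0, width_m / 2.0
    offsets = ((half_l, half_w), (half_l, -half_w), (-half_l, -half_w), (-half_l, half_w))
    corners = []
    for dx, dy in offsets:
        # Rotate the local offset by yaw, then shift to the box centre.
        corners.append((center_x + dx * cos_y - dy * sin_y,
                        center_y + dx * sin_y + dy * cos_y))
    return corners

# A 4 m by 2 m car, 10 m ahead, heading straight along the x axis.
print(bev_box_corners(10.0, 0.0, 4.0, 2.0, 0.0))
```

In a perspective image the same car would appear as a distorted shape whose apparent size depends on distance, which is why recovering these quantities directly in BEV is attractive.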

Right now, researchers are looking into how to make BEV systems even better by incorporating information that changes over time, which is called temporal BEV. They're also exploring how big models, similar to the ones used elsewhere in advanced AI, can help with self-driving. It's an exciting time for this kind of research. In hindsight, putting effort into BEV technology back in 2021 was a smart and timely move. The ongoing exploration of temporal BEV and larger models truly signifies the "vance" of this field.
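One practical detail behind temporal BEV is that the car itself moves between frames, so an older overhead view has to be shifted into the current frame before the two can be compared. Here is a minimal sketch of that alignment for a single point, assuming the car's motion since the previous frame is known as a translation and a yaw change, both expressed in the previous frame. The function and parameter names are hypothetical.

```python
import math

def prev_to_current_frame(x_prev, y_prev, ego_dx, ego_dy, ego_dyaw_rad):
    """Re-express a point from the previous ego frame in the current one.

    ego_dx and ego_dy give the car's displacement and ego_dyaw_rad its
    counter-clockwise rotation since the previous frame, measured in the
    previous frame (x forward, y left).
    """
    # Undo the translation, then undo the rotation.
    shifted_x, shifted_y = x_prev - ego_dx, y_prev - ego_dy
    cos_a, sin_a = math.cos(ego_dyaw_rad), math.sin(ego_dyaw_rad)
    return (cos_a * shifted_x + sin_a * shifted_y,
            -sin_a * shifted_x + cos_a * shifted_y)

# A parked car 10 m ahead; the ego car then drives 2 m straight forward.
print(prev_to_current_frame(10.0, 0.0, 2.0, 0.0, 0.0))
```

After this alignment, a stationary object lands in the same place in both frames, so any remaining difference between them reflects objects that actually moved.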

So the path ahead for BEV technology seems to involve two things: getting better at understanding how things move and change over time, and figuring out how to use bigger, more powerful AI models to process all that information. It's a continuous process of learning and improvement that pushes the boundaries of what self-driving cars can perceive and understand about their environment. This steady progress is what keeps the field of autonomous vehicles moving forward, always seeking new ways to make cars smarter and safer.

Detail Author:

- Name : Prof. Keon Vandervort
